TABARD: a novel benchmark for TABular Anomaly analysis, Reasoning and Detection

We study the capabilities of large language models (LLMs) in detecting fine-grained anomalies in tabular data. Specifically, we examine: (1) how well LLMs can identify diverse anomaly types, including factual, logical, temporal, and value-based errors; (2) the impact of prompt design and prompting strategies; and (3) the effect of table structure and anomaly type on detection accuracy. To this end, we introduce TABARD, a new benchmark constructed by perturbing tables from WikiTQ, FeTaQA, Spider, and BEAVER. The dataset spans multiple domains and eight anomaly categories, and it includes paired clean and corrupted tables. We evaluate LLMs using direct, indirect, and Chain-of-Thought (CoT) prompting. Our results reveal notable limitations in standard prompting, especially for complex reasoning tasks and longer tables. To overcome these issues, we propose a unified framework combining multi-step prompting, self-verification, and constraint-based rule execution. Our approach significantly improves precision and recall over standard prompting, offering a promising direction for robust and interpretable anomaly detection in tables.

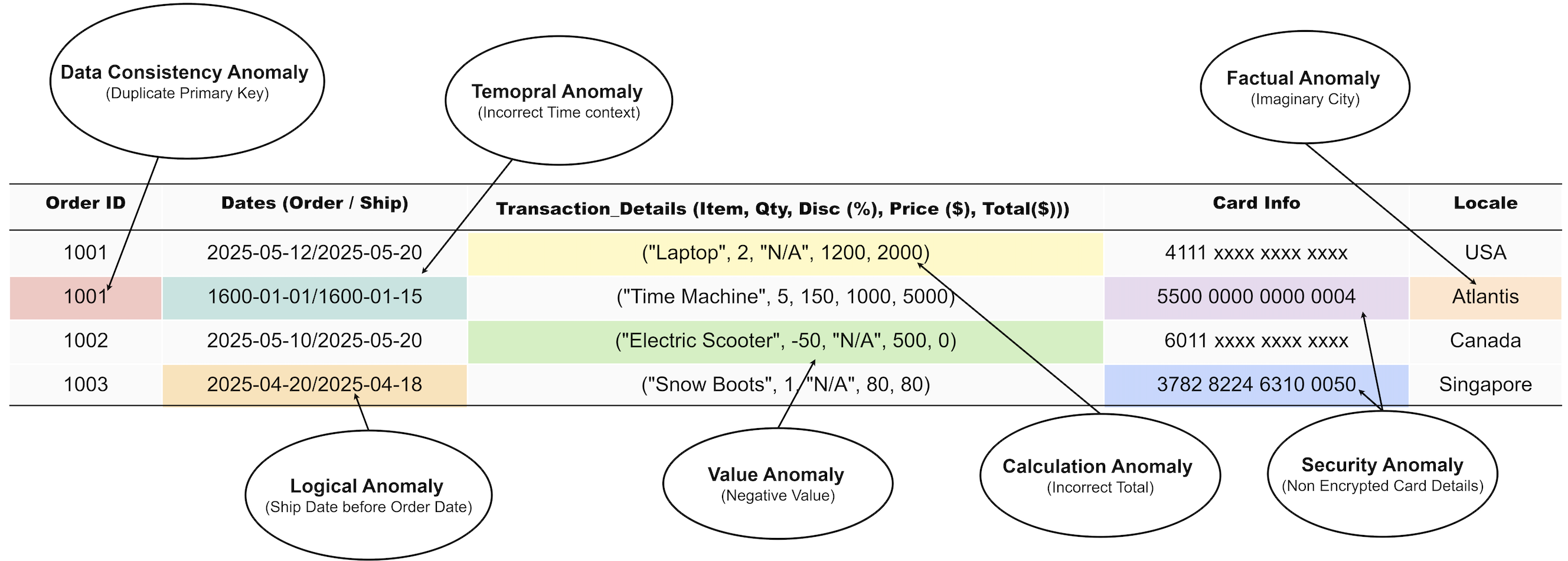

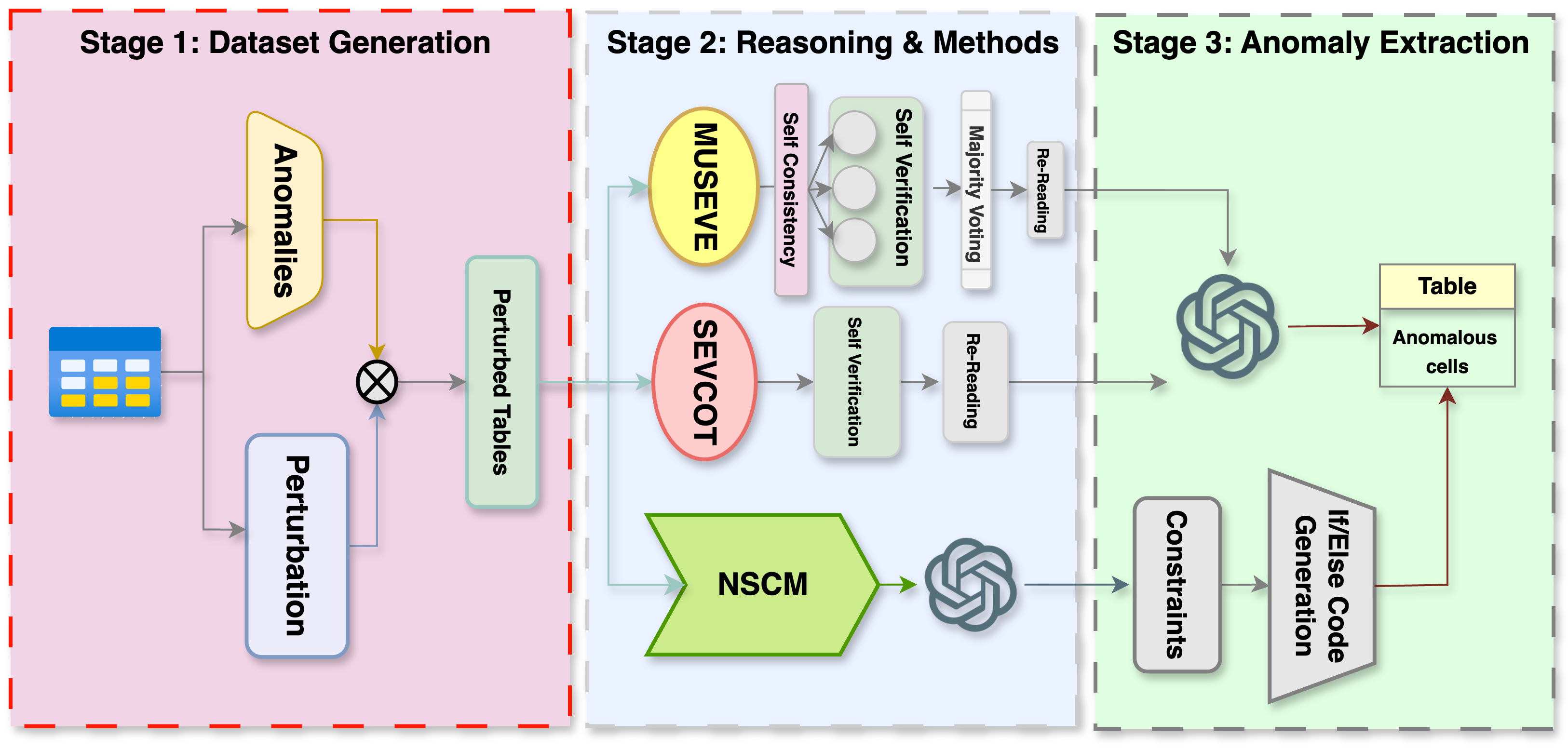

TABARD is built by perturbing clean tables from public datasets such as WikiTQ, FeTaQA, Spider, and BEAVER. Each table was modified with category-specific prompts to inject targeted anomalies (e.g., logical errors, temporal inconsistencies, miscalculations), while preserving the overall structure. This process ensures a controlled yet diverse benchmark covering eight anomaly categories.
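Because each corrupted table keeps the structure of its clean original, cell-level ground truth can in principle be recovered by comparing the two. The sketch below illustrates that idea only; it is not the benchmark's own tooling. The `Table` representation (a list of rows of strings) and the function name `changed_cells` are assumptions made for this example.

```python
from typing import List, Set, Tuple

# Assumed representation: a table is a list of rows, each a list of cell strings.
Table = List[List[str]]


def changed_cells(clean: Table, corrupted: Table) -> Set[Tuple[int, int]]:
    """Return the (row, column) positions whose values differ between the tables."""
    same_shape = len(clean) == len(corrupted) and all(
        len(a) == len(b) for a, b in zip(clean, corrupted)
    )
    if not same_shape:
        # Structure is assumed to be preserved by the perturbation step.
        raise ValueError("clean and corrupted tables must have identical shape")
    return {
        (r, c)
        for r, (row_a, row_b) in enumerate(zip(clean, corrupted))
        for c, (a, b) in enumerate(zip(row_a, row_b))
        if a != b
    }


# Hypothetical pair: the end year was perturbed to precede the start year.
clean = [["Event", "Start", "End"], ["Expo", "2001", "2003"]]
corrupted = [["Event", "Start", "End"], ["Expo", "2001", "1999"]]
anomalous = changed_cells(clean, corrupted)
```

For this toy pair, `anomalous` contains the single position `(1, 2)`, which could then serve as the label a detector is scored against.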

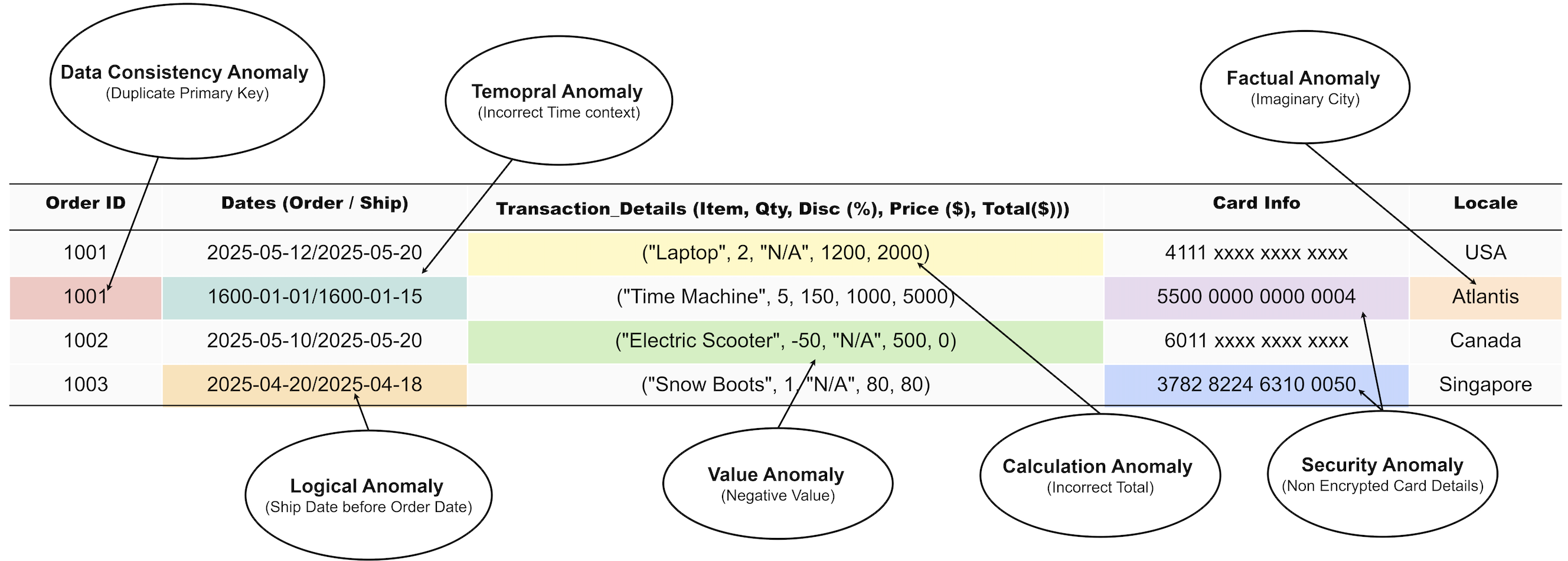

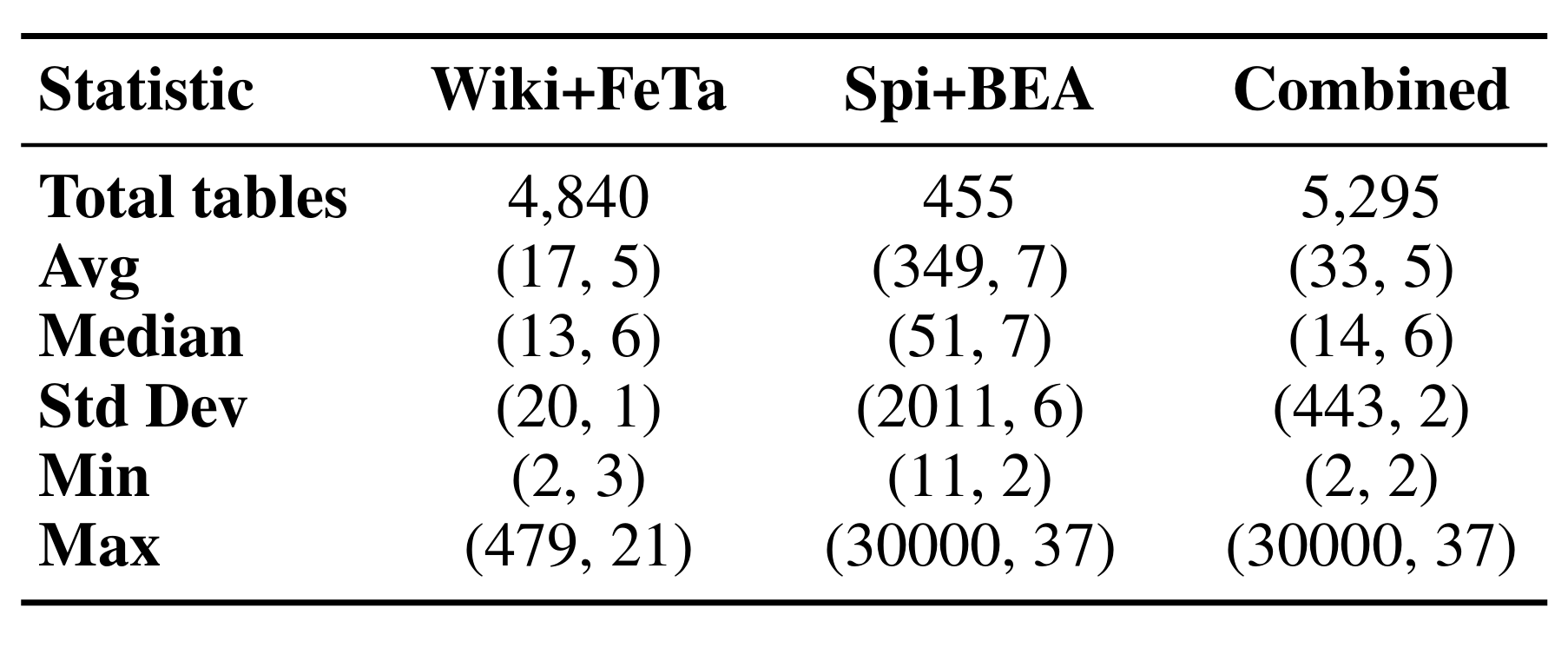

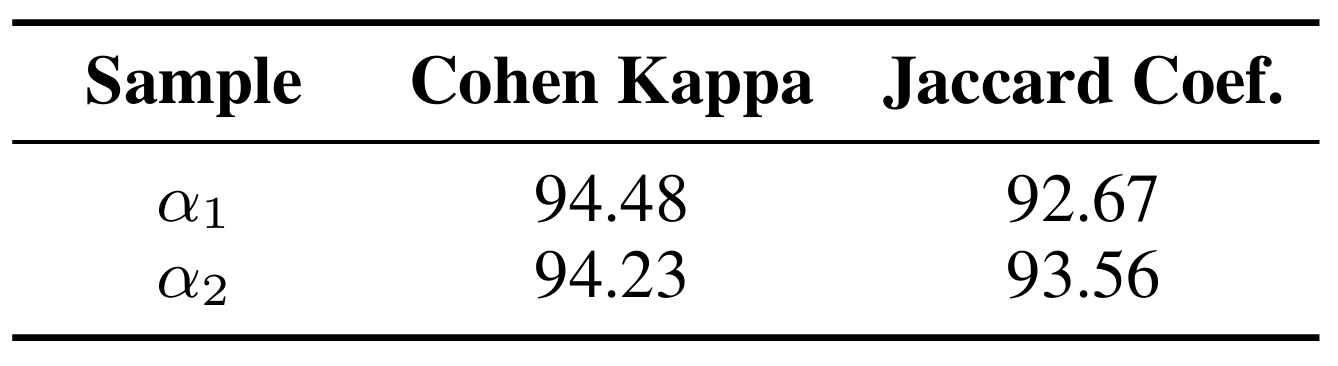

The dataset includes paired clean and corrupted tables spanning multiple domains. Manual verification was conducted on a representative subset, yielding strong inter-annotator agreement (κ ≈ 0.94). Below are summary tables illustrating dataset scale and verification quality.

Table 1: Dataset statistics.

Table 2: Verification results.
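For readers unfamiliar with the agreement statistic quoted above, the following sketch computes Cohen's kappa for two annotators. It assumes that κ refers to Cohen's kappa, which this page does not state explicitly, and the labels in the example are invented for illustration rather than taken from the verification study.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    """Cohen's kappa for two annotators labelling the same items."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("both annotators must label the same non-empty item set")
    n = len(a)
    # Observed agreement: fraction of items given the same label.
    observed = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement: product of each annotator's marginal label frequencies.
    count_a, count_b = Counter(a), Counter(b)
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1.0 - expected)


# Invented labels for four tables: does the injected anomaly look valid?
annotator_1 = ["valid", "valid", "invalid", "invalid"]
annotator_2 = ["valid", "valid", "invalid", "valid"]
kappa = cohens_kappa(annotator_1, annotator_2)
```

Here the annotators agree on three of four items (0.75) against a chance agreement of 0.5, so `kappa` is 0.5. A value near 0.94 would indicate agreement far above chance.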

Alongside baseline prompting strategies, we introduce three new methods designed to improve anomaly detection performance and interpretability: MUSEVE, SEVCOT, and a neuro-symbolic constraint method (NSCM).

Together, these methods outperform standard prompting by combining structured reasoning, self-checking, and executable constraints, offering more robust and interpretable anomaly detection in tables.
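To make the idea of executable constraints concrete, here is a minimal sketch of running rules over table rows and flagging the cells that violate them. It is an illustration under stated assumptions, not the method evaluated in the paper: the hand-written rules, the column names, and the `violations` helper are all hypothetical, and this page does not describe how the proposed methods obtain or execute their constraints.

```python
from typing import Callable, Dict, List, Tuple

Row = Dict[str, float]
# A rule is (name, column to flag on violation, predicate that must hold).
Rule = Tuple[str, str, Callable[[Row], bool]]

# Hypothetical constraints for a hypothetical medal table.
RULES: List[Rule] = [
    ("end_not_before_start", "end_year", lambda r: r["end_year"] >= r["start_year"]),
    ("total_is_sum", "total", lambda r: r["total"] == r["gold"] + r["silver"]),
]


def violations(rows: List[Row], rules: List[Rule]) -> List[Tuple[int, str, str]]:
    """Return (row index, flagged column, rule name) for every violated rule."""
    flagged = []
    for i, row in enumerate(rows):
        for name, column, holds in rules:
            if not holds(row):
                flagged.append((i, column, name))
    return flagged


rows = [
    {"start_year": 2001, "end_year": 2003, "gold": 2, "silver": 1, "total": 3},
    {"start_year": 2005, "end_year": 2004, "gold": 1, "silver": 1, "total": 5},
]
report = violations(rows, RULES)
```

Each entry in `report` names the rule that fired, which is one way a constraint-based detector can explain why a cell was flagged. Note that a violated rule involving several columns does not by itself identify which cell is wrong; flagging a single column per rule is a simplification made here.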

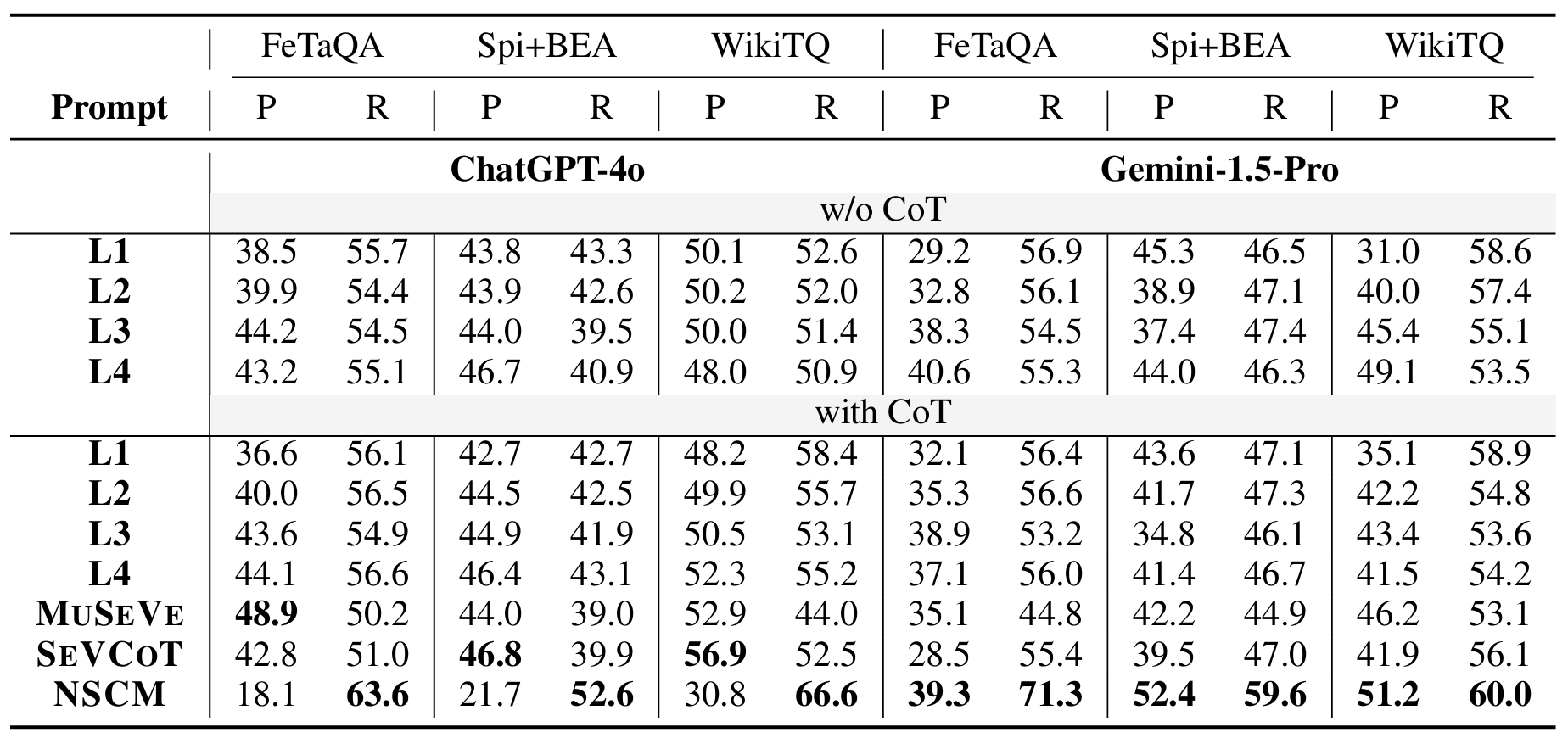

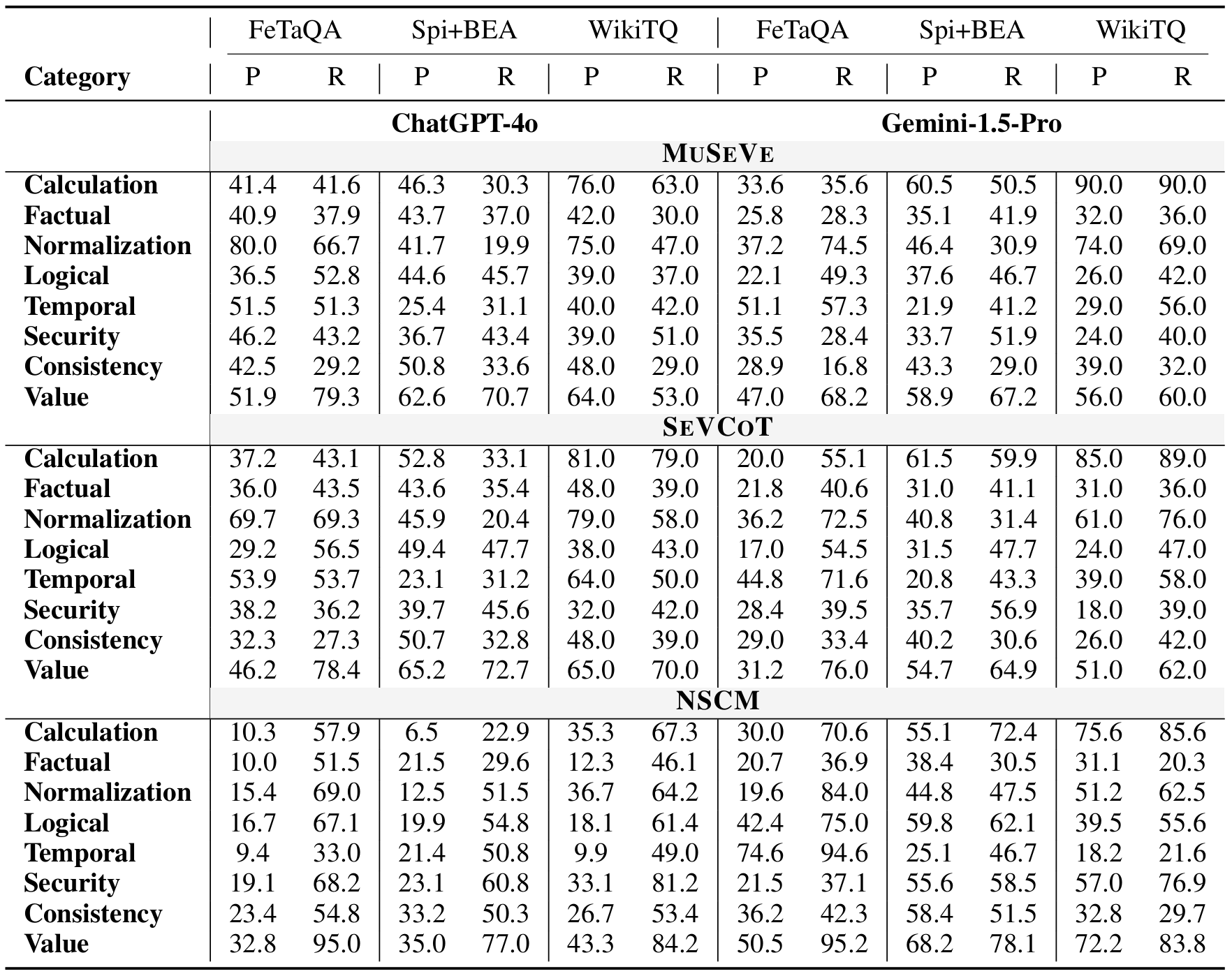

Our evaluation highlights clear limitations of standard prompting for anomaly detection in tables. Table 3 shows that accuracy drops significantly on longer or more complex tables, with Chain-of-Thought (CoT) reasoning yielding modest improvements. Table 4 compares baseline prompting with our proposed methods: MUSEVE, SEVCOT, and the neuro-symbolic constraint method (NSCM). These approaches achieve higher precision and recall, offering more robust and interpretable detection than conventional prompting.
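As a reminder of what the precision and recall figures measure, the sketch below scores a set of predicted anomalous cells against a gold set. Treating each anomaly as a `(row, column)` position is an assumption made for this example; the benchmark's exact scoring protocol is not specified on this page.

```python
from typing import Set, Tuple

Cell = Tuple[int, int]  # assumed (row, column) index of a flagged cell


def precision_recall(predicted: Set[Cell], gold: Set[Cell]) -> Tuple[float, float]:
    """Cell-level precision and recall; empty denominators yield 0.0."""
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    return precision, recall


# Invented example: the model flags two cells, one of which is truly anomalous.
predicted = {(1, 2), (3, 0)}
gold = {(1, 2), (2, 1), (4, 4)}
precision, recall = precision_recall(predicted, gold)
```

In this example precision is 0.5 (one of two flagged cells is correct) and recall is one third (one of three true anomalies is found). This shows why the two numbers are reported together.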

@inproceedings{tabard2025,
  title={TABARD: A Novel Benchmark for Tabular Anomaly Analysis, Reasoning and Detection},
  author={Choudhury, Manan Roy and Iyengar, Anirudh and Siingh, Shikhhar and Sugeeth, Raghavendra and Gupta, Vivek},
  booktitle={ACL ARR May},
  year={2025},
  note={Preferred venue: EMNLP. URL: https://openreview.net/forum?id=8Y1G4XD3vR}
}